DRAFT

Project Overview

- Goal: Help CharityML maximize the likelihood of receiving donations

- How: Construct a model that predicts whether an individual makes more than 50k/yr, an income level associated with being a candidate for giving donations

- Data Source: 1994 US Census data, UCI Machine Learning Repository

Note: Dataset donated by Ron Kohavi and Barry Becker, from the article "Scaling Up the Accuracy of Naive-Bayes Classifiers: A Decision-Tree Hybrid". Small changes to the dataset have been made, such as removing the 'fnlwgt' feature and records with missing or ill-formatted entries.

Table of Contents:

1.1 Data Dictionary

1.2 Simple Cleaning

1.3 Summary Statistics

1.4 Distributions

1.5 Skew and Variance

1.6 Relationships

2. Data Engineering

2.1 Separate Labels from Factors

2.2 Transformation

2.2.1 Indicator Variables

2.2.2 Logarithmic Transform

2.2.3 Impact

2.2.4 Normalization and Standardization

2.3 Shuffling and Splitting

2.4 Pipeline

3. Metrics

3.1 Accuracy

3.2 Precision

3.3 Recall

3.4 F$\beta$-Score

4. Models

4.1 Selection

4.2 Benchmark: Naive Bayes

4.2.1 Application

4.3 Model Application Pipeline

4.4 Logistic Regression

4.4.1 Application

4.4.2 Tuning

4.5 Random Forest

4.5.1 Application

4.5.2 Tuning

4.6 Ada Boost

4.6.1 Application

4.6.2 Tuning

4.7 Gradient Boost

4.7.1 Application

4.7.2 Tuning

4.8.1 Application

4.8.2 Tuning

4.9.1 Application

4.9.2 Tuning

4.10 Comparison

4.10.1 Feature Importance

4.10.2 Selection

4.10.3 Comp: Reduced Feature Model Performance

5. Summary

1.1 EDA: Data Dictionary

- age: continuous.

- workclass: Private, Self-emp-not-inc, Self-emp-inc, Federal-gov, Local-gov, State-gov, Without-pay, Never-worked.

- education_level: Bachelors, Some-college, 11th, HS-grad, Prof-school, Assoc-acdm, Assoc-voc, 9th, 7th-8th, 12th, Masters, 1st-4th, 10th, Doctorate, 5th-6th, Preschool.

- education-num: continuous.

- marital-status: Married-civ-spouse, Divorced, Never-married, Separated, Widowed, Married-spouse-absent, Married-AF-spouse.

- occupation: Tech-support, Craft-repair, Other-service, Sales, Exec-managerial, Prof-specialty, Handlers-cleaners, Machine-op-inspct, Adm-clerical, Farming-fishing, Transport-moving, Priv-house-serv, Protective-serv, Armed-Forces.

- relationship: Wife, Own-child, Husband, Not-in-family, Other-relative, Unmarried.

- race: Black, White, Asian-Pac-Islander, Amer-Indian-Eskimo, Other.

- sex: Female, Male.

- capital-gain: continuous.

- capital-loss: continuous.

- hours-per-week: continuous.

- native-country: United-States, Cambodia, England, Puerto-Rico, Canada, Germany, Outlying-US(Guam-USVI-etc), India, Japan, Greece, South, China, Cuba, Iran, Honduras, Philippines, Italy, Poland, Jamaica, Vietnam, Mexico, Portugal, Ireland, France, Dominican-Republic, Laos, Ecuador, Taiwan, Haiti, Columbia, Hungary, Guatemala, Nicaragua, Scotland, Thailand, Yugoslavia, El-Salvador, Trinadad&Tobago, Peru, Hong, Holand-Netherlands.

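1.2 EDA: Simple Cleaning and Engineering

Standardizing column names to the PEP 8 naming convention (lowercase snake_case, e.g. education-num becomes education_num) makes them easier to use as identifiers in code.

There are a number of categorical variables (e.g. workclass, occupation). Converting them to numerical indicator columns with one-hot encoding makes them usable by most models.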
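The renaming step can be sketched in pandas as follows. This is a minimal sketch, not the notebook's code: to_snake_case is a hypothetical helper, and it assumes the raw column names use hyphens (as in education-num).

```python
import pandas as pd


def to_snake_case(df: pd.DataFrame) -> pd.DataFrame:
    """Return a copy of df whose column names use PEP 8-style snake_case."""
    out = df.copy()
    # Strip stray whitespace, turn hyphens into underscores and lowercase the names
    out.columns = [col.strip().replace("-", "_").lower() for col in out.columns]
    return out


raw = pd.DataFrame(columns=["age", "education-num", "marital-status", "hours-per-week"])
print(list(to_snake_case(raw).columns))
# ['age', 'education_num', 'marital_status', 'hours_per_week']
```

Returning a copy leaves the raw frame untouched, so both naming schemes remain available while exploring.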

Type: pandas.core.frame.DataFrame

RangeIndex: 45222 entries, 0 to 45221

Data columns (total 14 columns):

| # | Column | Non-Null Count | Dtype |
|---|---|---|---|
| 0 | age | 45222 non-null | int64 |
| 1 | workclass | 45222 non-null | object |
| 2 | education_level | 45222 non-null | object |
| 3 | education_num | 45222 non-null | float64 |
| 4 | marital_status | 45222 non-null | object |
| 5 | occupation | 45222 non-null | object |
| 6 | relationship | 45222 non-null | object |
| 7 | race | 45222 non-null | object |
| 8 | sex | 45222 non-null | object |
| 9 | capital_gain | 45222 non-null | float64 |
| 10 | capital_loss | 45222 non-null | float64 |
| 11 | hours_per_week | 45222 non-null | float64 |
| 12 | native_country | 45222 non-null | object |
| 13 | income | 45222 non-null | object |

dtypes: float64(4), int64(1), object(9)

memory usage: 4.8+ MB

| | count | unique | top | freq | mean | std | min | 25% | 50% | 75% | max |
|---|---|---|---|---|---|---|---|---|---|---|---|
| age | 45222 | NaN | NaN | NaN | 38.5479 | 13.2179 | 17 | 28 | 37 | 47 | 90 |
| workclass | 45222 | 7 | Private | 33307 | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| education_level | 45222 | 16 | HS-grad | 14783 | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| education_num | 45222 | NaN | NaN | NaN | 10.1185 | 2.55288 | 1 | 9 | 10 | 13 | 16 |
| marital_status | 45222 | 7 | Married-civ-spouse | 21055 | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| occupation | 45222 | 14 | Craft-repair | 6020 | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| relationship | 45222 | 6 | Husband | 18666 | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| race | 45222 | 5 | White | 38903 | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| sex | 45222 | 2 | Male | 30527 | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| capital_gain | 45222 | NaN | NaN | NaN | 1101.43 | 7506.43 | 0 | 0 | 0 | 0 | 99999 |
| capital_loss | 45222 | NaN | NaN | NaN | 88.5954 | 404.956 | 0 | 0 | 0 | 0 | 4356 |
| hours_per_week | 45222 | NaN | NaN | NaN | 40.938 | 12.0075 | 1 | 40 | 40 | 45 | 99 |
| native_country | 45222 | 41 | United-States | 41292 | NaN | NaN | NaN | NaN | NaN | NaN | NaN |
| income | 45222 | 2 | <=50K | 34014 | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

Number of observations: 45222

Number of people with income > 50k: 11208

Number of people with income <= 50k: 34014

Percent of people with income > 50k: 24.78

1.5 EDA: Skew and Variance

The features capital_gain and capital_loss are positively skewed (i.e. have a long tail in the positive direction).

To reduce this skew, a logarithmic transformation, $\tilde x = \ln\left(x + 1\right)$, can be applied; the $+1$ keeps the many zero values defined, since $\ln\left(0\right)$ is undefined. This transformation compresses large values, reducing the variance and pulling the mean closer to the center of the distribution.

Why does this matter: The extreme points may affect the performance of the predictive model.

Why care: We want an easily discernible relationship between the independent and dependent variables; the skew makes that more complicated.

Why DOESN'T this matter: The distribution of the independent variables is not an assumption of most models. Linear regression, for example, makes its assumptions about the residuals, $u = Y - \hat{Y}$: a zero conditional mean, $E\left(u | X\right) = 0$, and homoskedasticity, $Var\left(u | X\right) = \sigma^{2}$. In this analysis, the dependent variable is categorical (i.e. discrete, not continuous), so linear regression is not an appropriate model anyway.

| | Feature | Skewness | Mean | Variance |
|---|---|---|---|---|
| 0 | Capital Loss | 4.516154 | 88.595418 | 1.639858e+05 |
| 1 | Capital Gain | 11.788611 | 1101.430344 | 5.634525e+07 |
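The statistics in the table above can be computed with pandas. This is a minimal sketch, not the notebook's code: skew_summary is a hypothetical helper, and using the population variance (ddof=0) is an assumption, since pandas' default var() is the sample variance (ddof=1); with 45,222 rows the difference is negligible.

```python
import pandas as pd


def skew_summary(df: pd.DataFrame, columns) -> pd.DataFrame:
    """Skewness, mean and variance per column (hypothetical helper)."""
    return pd.DataFrame({
        "Feature": list(columns),
        "Skewness": [df[c].skew() for c in columns],       # bias-corrected sample skewness
        "Mean": [df[c].mean() for c in columns],
        "Variance": [df[c].var(ddof=0) for c in columns],  # population variance (assumption)
    })


# Toy data (hypothetical): mostly zeros plus a few large values, like capital_gain
toy = pd.DataFrame({"capital_gain": [0, 0, 0, 0, 0, 0, 0, 0, 2174, 99999]})
print(skew_summary(toy, ["capital_gain"]))
```

A positive skewness with a mean far above the median (here 0) is the signature of the long right tail described above.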

1.6 EDA: Relationships

Toward determining what factors should be included in the model, there is something to note with regard to categorical versus continuous variables.

(Pearson) correlation is defined as: $$r = \frac{\sum\left(X-\bar{X}\right)\left(Y-\bar{Y}\right)}{\sqrt{\sum\left(X-\bar{X}\right)^{2}}\cdot\sqrt{\sum\left(Y-\bar{Y}\right)^{2}}}$$

This requires numerical values, so it is not meaningful for categorical variables. Instead, it can be useful to use the uncertainty coefficient, also known as Theil's U.

Where we have the entropy of a single distribution:

$$H\left(X\right)=-\sum_{x} P_{X}\left(x\right)\log P_{X}\left(x\right)$$

the conditional entropy:

$$H\left(X|Y\right) = - \sum_{x,y} P_{X,Y}\left(x,y\right)\log P_{X|Y}\left(x|y\right)$$

and the uncertainty coefficient:

$$U\left(X|Y\right)=\frac{H\left(X\right)-H\left(X|Y\right)}{H\left(X\right)} = \frac{I\left(X;Y\right)}{H\left(X\right)}$$

where $I\left(X;Y\right)$ is the mutual information, or the amount of information obtained about one random variable through observing the other random variable. $U$ ranges from 0 (knowing $Y$ tells nothing about $X$) to 1 (knowing $Y$ determines $X$), and it is asymmetric: in general, $U\left(X|Y\right) \neq U\left(Y|X\right)$.

To quote Shaked Zychlinski, "given the value of x, how many possible states does y have, and how often do they occur".
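The uncertainty coefficient can be computed from value counts alone. A minimal pure-Python sketch of the formulas above, using the natural log; theils_u and its helpers are hypothetical names, and returning 1.0 for a constant X is a convention assumed here.

```python
import math
from collections import Counter


def entropy(values):
    """H(X) = -sum_x P(x) log P(x) for a sequence of categorical values."""
    n = len(values)
    return -sum((c / n) * math.log(c / n) for c in Counter(values).values())


def conditional_entropy(x, y):
    """H(X|Y) = -sum_{x,y} P(x,y) log P(x|y), with P(x|y) = count(x,y) / count(y)."""
    n = len(x)
    y_counts = Counter(y)
    xy_counts = Counter(zip(x, y))
    return -sum(
        (c_xy / n) * math.log(c_xy / y_counts[y_val])
        for (_, y_val), c_xy in xy_counts.items()
    )


def theils_u(x, y):
    """Uncertainty coefficient U(X|Y) = (H(X) - H(X|Y)) / H(X), in [0, 1]."""
    h_x = entropy(x)
    if h_x == 0:
        return 1.0  # constant X: treated as fully explained (assumed convention)
    return (h_x - conditional_entropy(x, y)) / h_x


print(theils_u(["a", "a", "b", "b"], ["c", "d", "c", "d"]))  # independent: 0
print(theils_u(["a", "a", "b", "b"], [1, 2, 3, 4]))          # Y determines X: 1
print(theils_u([1, 2, 3, 4], ["a", "a", "b", "b"]))          # reverse direction: 0.5
```

The last two calls show the asymmetry: knowing the four-valued variable pins down the two-valued one completely, but not the other way around.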

So, can this help us discern some information about what to do with our factors?

I will step forward now with the idea that collinearity, where one variable can easily be derived from another within the model, is not desired (i.e. two variables with strong relationships with one another should not both be included, as they may reduce the predictive power of the model).

Citation: Shaked Zychlinski

Notable relationships

A model including both variables of a strongly associated pair would carry redundant information. For each pair below (association in parentheses), the variable with the weaker association with income is dropped:

- age and marital_status (0.56): age & income is 0.24, marital_status & income is 0.20; drop marital_status

- age and relationship (0.46): age & income is 0.24, relationship & income is 0.21; drop relationship

- education_num and occupation (0.57): education_num & income is 0.33, occupation & income is 0.11; drop occupation

- marital_status and relationship (0.49): already determined that marital_status and relationship would be dropped from the model

2. Data Engineering

2.1 Separate Labels from Factors

2.2 Transformation

2.2.1 Indicator Variables

2.2.2 Logarithmic Transform

2.2.3 Impact

2.2.4 Normalization and Standardization

2.3 Shuffling and Splitting

2.1 DE: Separate Labels from Factors

For training an algorithm, it is useful to separate the label, or dependent variable ($Y$), from the rest of the data: the features, or independent variables ($X$).
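A minimal sketch of the separation with pandas. The helper name split_features_label is hypothetical, and the positive label string ">50K" is an assumption (the summary statistics only show "<=50K", the most frequent value); the 1/0 encoding matches the label description in the model-selection section.

```python
import pandas as pd


def split_features_label(df, label="income", positive=">50K"):
    """Separate the label Y (1 for the positive class, else 0) from the features X."""
    y = (df[label] == positive).astype(int)
    X = df.drop(columns=label)
    return X, y


toy = pd.DataFrame({"age": [39, 50, 38], "income": ["<=50K", ">50K", "<=50K"]})
X, y = split_features_label(toy)
print(list(X.columns), y.tolist())  # ['age'] [0, 1, 0]
```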

2.2.1 DE: Indicator Variables

A common way to handle categorical variables is to make indicator, or dummy, variables from the values of the factors.

pandas has a simple function, pandas.get_dummies(), that does this quickly.

It creates a new variable for every value a categorical variable takes, as demonstrated in this example:

| | someFeature | | someFeature_A | someFeature_B | someFeature_C |
|---|---|---|---|---|---|
| 0 | B | | 0 | 1 | 0 |
| 1 | C | ----> one-hot encode ----> | 0 | 0 | 1 |
| 2 | A | | 1 | 0 | 0 |

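This means that the number of factors, p, will grow, potentially by a large amount. Specifically, let p be the number of factors before encoding, k the number of categorical variables, $c_{j}$ the number of distinct categories of the j-th categorical variable, and $p_{I}$ the number of factors after creating indicator variables. Each categorical variable is replaced by one indicator column per category, so:

$$p_{I} = \left(p - k\right) + \sum_{j=1}^{k} c_{j}$$

It is also worth noting that for modeling, it is important that one value of each factor, a "base case", be dropped from the data. This is because the base case is redundant, i.e. it can be inferred perfectly from the other cases, and, more specifically and more detrimental to our model, keeping it leads to perfect multicollinearity of the terms: the indicator columns of one variable always sum to 1, duplicating the model's intercept.

In some models (e.g. logistic regression, linear regression), an assumption of no perfect multicollinearity must hold.

So, the final number of factors after creating indicator variables and dropping the base case is: $$\tilde{p}=p_{I} - k$$

For this data, $p = 13$ and the $k = 8$ categorical variables take $7 + 16 + 7 + 14 + 6 + 5 + 2 + 41 = 98$ distinct values (the unique column of the summary statistics), so $p_{I} = \left(13 - 8\right) + 98 = 103$ and $\tilde{p} = 103 - 8 = 95$.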

13 total features before one-hot encoding. 95 total features after one-hot encoding.
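The encoding and the base-case drop can be sketched with pandas.get_dummies, whose drop_first=True option removes the first category of each encoded variable. The toy frame mirrors the someFeature example above; the age column is hypothetical.

```python
import pandas as pd

df = pd.DataFrame({"someFeature": ["B", "C", "A"], "age": [39, 50, 38]})

# One indicator column per category
full = pd.get_dummies(df, columns=["someFeature"])
# Same, but the first category ("A") is dropped as the base case
reduced = pd.get_dummies(df, columns=["someFeature"], drop_first=True)

print(list(full.columns))     # ['age', 'someFeature_A', 'someFeature_B', 'someFeature_C']
print(list(reduced.columns))  # ['age', 'someFeature_B', 'someFeature_C']
```

A row with 0 in every remaining someFeature column is then read as the base case "A".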

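2.2.2 DE: Logarithmic Transform

To reduce skew, a logarithmic transformation, $\tilde x = \ln\left(x + 1\right)$, can be applied; the $+1$ is needed because most entries of capital_gain and capital_loss are 0 and $\ln\left(0\right)$ is undefined. This transformation compresses large values, reducing the variance and pulling the mean closer to the center of the distribution.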
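A minimal sketch of the transformation with NumPy, assuming the shifted form $\ln(x + 1)$ (np.log1p). The toy rows are hypothetical, spanning the minimum, the first record's value and the maximum seen in the data.

```python
import numpy as np
import pandas as pd

skewed = ["capital_gain", "capital_loss"]
# Toy rows (hypothetical) spanning the observed range, including the many zeros
toy = pd.DataFrame({
    "capital_gain": [0.0, 2174.0, 99999.0],
    "capital_loss": [0.0, 0.0, 4356.0],
})

toy_log = toy.copy()
# log1p(x) = ln(x + 1): zeros stay 0, whereas ln(0) would be -inf
toy_log[skewed] = np.log1p(toy[skewed])
print(toy_log)
```

The maximum capital gain of 99999 becomes $\ln(100000) \approx 11.51$, which is why the transformed variance is so much smaller.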

The logarithmic transformation reduced the skew and the variance of each factor.

| Feature | Skewness | Mean | Variance |
|---|---|---|---|
| Capital Loss | 4.516154 | 88.595418 | 163985.81018 |
| Capital Gain | 11.788611 | 1101.430344 | 56345246.60482 |
| Log Capital Loss | 4.271053 | 0.355489 | 2.54688 |
| Log Capital Gain | 3.082284 | 0.740759 | 6.08362 |


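2.2.3 DE: Impact

Originally, the influence of capital_loss on income was statistically significant, but after the logarithmic transformation, it is not.

In the output below, the 95% confidence interval of the capital_loss coefficient (columns [0.025, 0.975]) excludes zero for the original feature but includes zero after the transformation.

An interval that includes zero means the influence of the independent variable on the dependent variable is statistically indistinguishable from zero at the 5% level (here, p = 0.1006 after the transformation versus p = 0.0002 before).

Note that both fits use capital_loss as the only regressor, without an intercept (only capital_loss appears in the coefficient table), which is why the pseudo R-squared is negative; the comparison illustrates the effect of the transformation rather than a fully specified model.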

Original model

Results: Logit

| Model: | Logit | Pseudo R-squared: | -0.238 |
|---|---|---|---|
| Dependent Variable: | income | AIC: | 62678.9084 |
| Date: | 2020-05-13 20:37 | BIC: | 62687.6278 |
| No. Observations: | 45222 | Log-Likelihood: | -31338. |
| Df Model: | 0 | LL-Null: | -25322. |
| Df Residuals: | 45221 | LLR p-value: | nan |
| Converged: | 1.0000 | Scale: | 1.0000 |
| No. Iterations: | 3.0000 | | |

| | Coef. | Std.Err. | z | P>\|z\| | [0.025 | 0.975] |
|---|---|---|---|---|---|---|
| capital_loss | 0.0001 | 0.0000 | 3.7473 | 0.0002 | 0.0000 | 0.0001 |

Transformed model

Results: Logit

| Model: | Logit | Pseudo R-squared: | -0.238 |
|---|---|---|---|
| Dependent Variable: | income | AIC: | 62690.3061 |
| Date: | 2020-05-13 20:37 | BIC: | 62699.0254 |
| No. Observations: | 45222 | Log-Likelihood: | -31344. |
| Df Model: | 0 | LL-Null: | -25322. |
| Df Residuals: | 45221 | LLR p-value: | nan |
| Converged: | 1.0000 | Scale: | 1.0000 |
| No. Iterations: | 3.0000 | | |

| | Coef. | Std.Err. | z | P>\|z\| | [0.025 | 0.975] |
|---|---|---|---|---|---|---|
| capital_loss | 0.0095 | 0.0058 | 1.6419 | 0.1006 | -0.0018 | 0.0207 |
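The reported interval and p-value can be checked by hand: the [0.025, 0.975] columns are a Wald interval (coefficient ± 1.96 × standard error), and P>|z| is the two-sided standard-normal tail probability of z. A minimal sketch using Python's statistics.NormalDist; because the printed inputs are rounded to four decimals, the recomputed bounds only approximately match the output above.

```python
from statistics import NormalDist


def wald_interval(coef, std_err, level=0.95):
    """Two-sided Wald confidence interval: coef +/- z_crit * std_err."""
    z_crit = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for 95%
    return coef - z_crit * std_err, coef + z_crit * std_err


def two_sided_p(z_stat):
    """Two-sided p-value of a z statistic under the standard normal."""
    return 2 * (1 - NormalDist().cdf(abs(z_stat)))


# Rounded values read from the transformed-model output above
low, high = wald_interval(0.0095, 0.0058)
print(round(low, 4), round(high, 4))  # straddles zero
print(round(two_sided_p(1.6419), 4))  # close to the reported 0.1006
print(two_sided_p(3.7473) < 0.05)     # original model: significant
```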

2.2.4 DE: Normalization and Standardization

These two terms, normalization and standardization, are frequently used interchangeably, but they describe two different ways of scaling.

- Normalization: scale values to the range [0, 1], $x' = \frac{x - x_{min}}{x_{max} - x_{min}}$

- Standardization: rescale values to mean 0 and variance 1, $z = \frac{x - \mu}{\sigma}$; this changes the location and scale of the data but not the shape of its distribution, so it does not make the data normally distributed

Earlier, capital_gain and capital_loss were transformed logarithmically, reducing their skew and affecting the model's predictive power (i.e. its ability to discern the relationship between the dependent and independent variables).

Another way of influencing the model's predictive power is to normalize the numerical independent variables. Afterwards, every numerical feature shares the same [0, 1] range, so features with large magnitudes (e.g. capital_gain) do not dominate in models that are sensitive to feature scale.

However, after scaling is applied, the values no longer carry their original units, so the data can no longer be read in its raw form.

Note the output from scaling: age is no longer 39 but is instead 0.30137, i.e. $\frac{39 - 17}{90 - 17}$, using the minimum (17) and maximum (90) age from the summary statistics. This value is meaningful only in the context of the rest of the data and not on its own.

Original Data

| | age | education_num | capital_gain | capital_loss | hours_per_week | workclass_ Local-gov | workclass_ Private | workclass_ Self-emp-inc | workclass_ Self-emp-not-inc | workclass_ State-gov | ... | native_country_ Portugal | native_country_ Puerto-Rico | native_country_ Scotland | native_country_ South | native_country_ Taiwan | native_country_ Thailand | native_country_ Trinadad&Tobago | native_country_ United-States | native_country_ Vietnam | native_country_ Yugoslavia |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 39 | 13.0 | 2174.0 | 0.0 | 40.0 | 0 | 0 | 0 | 0 | 1 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 |

1 rows × 95 columns

Scaled Data

| | age | education_num | capital_gain | capital_loss | hours_per_week | workclass_ Local-gov | workclass_ Private | workclass_ Self-emp-inc | workclass_ Self-emp-not-inc | workclass_ State-gov | ... | native_country_ Portugal | native_country_ Puerto-Rico | native_country_ Scotland | native_country_ South | native_country_ Taiwan | native_country_ Thailand | native_country_ Trinadad&Tobago | native_country_ United-States | native_country_ Vietnam | native_country_ Yugoslavia |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0.30137 | 0.8 | 0.667492 | 0.0 | 0.397959 | 0 | 0 | 0 | 0 | 1 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 |

1 rows × 95 columns
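A minimal sketch of min-max normalization with pandas; min_max_scale is a hypothetical helper. The minima and maxima come from the summary statistics (age 17 to 90, education_num 1 to 16, hours_per_week 1 to 99), and the middle toy row reproduces the scaled values above: 39 becomes 0.30137, 13 becomes 0.8 and 40 becomes 0.397959. scikit-learn's MinMaxScaler applies the same formula; whether the notebook used it is an assumption the output does not confirm.

```python
import pandas as pd


def min_max_scale(df: pd.DataFrame, columns) -> pd.DataFrame:
    """Rescale each listed column to [0, 1] via (x - min) / (max - min)."""
    out = df.copy()
    for c in columns:
        lo, hi = df[c].min(), df[c].max()
        # Assumes hi > lo; a constant column would divide by zero
        out[c] = (df[c] - lo) / (hi - lo)
    return out


# Toy data (hypothetical): column minimum, first census record, column maximum
toy = pd.DataFrame({
    "age": [17, 39, 90],
    "education_num": [1, 13, 16],
    "hours_per_week": [1, 40, 99],
})
print(min_max_scale(toy, list(toy.columns)))
```

In a real pipeline the minimum and maximum should be learned from the training split only and reused on the test split, which is what fitting a scaler once and calling transform on both achieves.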

2.3 DE: Shuffling and Splitting

After one-hot encoding, all categorical variables have been converted into numerical features, and the numerical features have been normalized (i.e. scaled between 0 and 1).

Next, to train a machine learning model, the data must be split into segments: one used to train the model (the training set) and the other used to test the model (the testing set).

A common method is to split by proportion of the data; an 80:20 training:testing split is typical.

sklearn has a function that works well for this, sklearn.model_selection.train_test_split, which randomly assigns each observation to either the training or the testing set. Its key parameters:

- random_state: Setting a seed ensures the random split is the same every time the code runs. This is necessary for evaluating the effectiveness of the model; otherwise, each run would train and test with the same proportional split but on different observations of the data.

- test_size: The proportion of the data held out for testing. The training proportion, train_size, is generally its complement ($1 -$ test_size). For example, if test_size is 0.2, train_size is 0.8.

- stratify: Preserves the proportion of the label classes in the split data. As an example, let 1 and 0 indicate the positive and negative cases of a label, respectively. Without stratification, it is possible that only positive or only negative classes exist in the training or testing set (e.g. $\forall y \in Y_{train}, y = 1$). Beyond avoiding this worst case, stratify keeps the ratio of positive to negative classes the same in the training and testing sets.

Here the data is split 80:20 with a seed set of 0 and the distribution of the label's classes preserved:

Original ratio of positive-to-negative classes: 0.33

Training ratio of positive-to-negative classes: 0.33

Testing ratio of positive-to-negative classes: 0.33
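A minimal sketch of the same stratified 80:20 split with seed 0, using sklearn.model_selection.train_test_split. The toy data is hypothetical, built with a 1:3 positive-to-negative ratio (0.33, as in the census data).

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Toy data (hypothetical): 100 rows, 25 positive and 75 negative labels
X = np.arange(200).reshape(100, 2)
y = np.array([1] * 25 + [0] * 75)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0, stratify=y
)

for name, labels in [("Original", y), ("Training", y_train), ("Testing", y_test)]:
    ratio = labels.sum() / (len(labels) - labels.sum())
    print(f"{name} ratio of positive-to-negative classes: {ratio:.2f}")
```

With stratify=y, the 20 test rows contain exactly 5 positives, so all three ratios print as 0.33.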

Number of Factors without removing high associations: 95

Number of Factors after removing high associations: 71

Reduced by: 24

Transformed data is equivalent in steps and pipeline: True

X_train is equivalent in steps and pipeline: True

X_test is equivalent in steps and pipeline: True

y_train is equivalent in steps and pipeline: True

y_test is equivalent in steps and pipeline: True

3. Metrics

3.1 Accuracy

3.2 Precision

3.3 Recall

3.4 F$\beta$-Score

In terms of income as a predictor for donating, CharityML has stated they will most likely receive a donation from individuals whose income exceeds 50,000/yr.

CharityML has limited funds to reach out to potential donors, so misclassifying a person as making more than 50,000/yr is COSTLY. It is more important that the people the model predicts to make more than 50,000/yr actually do (true positives) than that the model finds every such person; wrongly predicting that someone does when they don't (a false positive) wastes limited outreach funds.

3.1 Met: Accuracy

Accuracy is the ratio of correctly predicted data points to the total number of data points:

$$Accuracy=\frac{\sum Correctly\ Classified\ Points}{\sum All\ Points}=\frac{\sum True\ Positives + \sum True\ Negatives}{\sum Observations}$$

A confusion matrix shows what a true/false positive/negative is:

| | Predict 1 | Predict 0 |
|---|---|---|
| True 1 | True Positive | False Negative |
| True 0 | False Positive | True Negative |

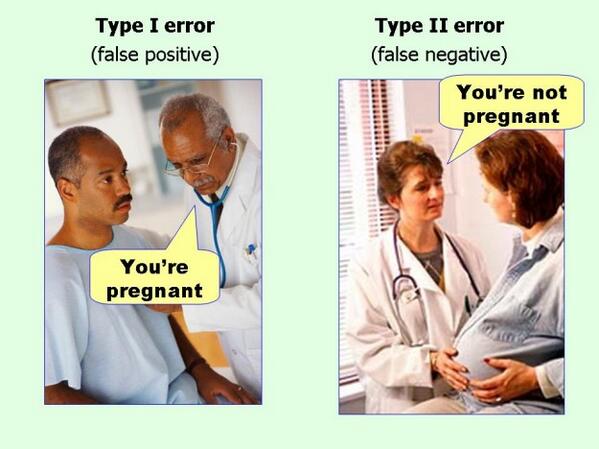
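The confusion-matrix counts and the accuracy can be computed directly from true and predicted labels. A minimal pure-Python sketch; confusion_counts and accuracy are hypothetical helpers (sklearn.metrics.confusion_matrix and accuracy_score provide the same information).

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # predicted 1, actually 0
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # predicted 0, actually 1
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def accuracy(y_true, y_pred):
    """(TP + TN) / all observations."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return (tp + tn) / (tp + fp + fn + tn)


y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0]
print(confusion_counts(y_true, y_pred))  # (2, 1, 1, 2)
print(accuracy(y_true, y_pred))          # 4 of 6 correct
```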

These errors are sometimes referred to as Type 1 and Type 2 errors:

| | Predict 1 | Predict 0 |
|---|---|---|
| True 1 | True Positive | Type 2 Error |
| True 0 | Type 1 Error | True Negative |

- Type 1: a positive class is predicted for a negative class (false positive)

- Type 2: a negative class is predicted for a positive class (false negative)

For this analysis, we want to avoid false positives or type 1 errors. Put differently, we prefer false negatives to false positives.

A model that meets that criterion, $False\ Negative \succ False\ Positive$, is said to prefer precision over recall, i.e. it is a high-precision model.

Humorously, and perhaps more understandably, these type errors can be demonstrated as such:

3.2 Met: Precision

Precision is the ratio of correctly predicted positives to all positive predictions (correct as well as incorrect predictions of the positive class):

$$Precision = \frac{\sum True\ Positives}{\sum True\ Positives + \sum False\ Positives}$$

A model which avoids false positives would have a high precision value, or score. Such a model may also accept more false negatives.

3.3 Met: Recall

Recall, sometimes referred to as a model's sensitivity, is the ratio of correctly predicted positives to the actual number of positives (true positives and false negatives are both actual positives):

$$Recall = \frac{\sum True\ Positives}{\sum Actual\ Positives} = \frac{\sum True\ Positives}{\sum True\ Positives + \sum False\ Negatives}$$

A model which avoids false negatives would have a high recall value, or score. Such a model may also accept more false positives.
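Precision and recall follow directly from the confusion-matrix counts. A minimal sketch with hypothetical counts; returning 0.0 when a denominator is zero is a convention assumed here (scikit-learn's precision_score and recall_score also return 0 in that case by default, with a warning).

```python
def precision(tp, fp):
    """Share of positive predictions that are correct: TP / (TP + FP)."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Share of actual positives that are found: TP / (TP + FN)."""
    return tp / (tp + fn) if (tp + fn) else 0.0


# Hypothetical counts: 40 true positives, 10 false positives, 30 false negatives
print(precision(40, 10))  # 0.8
print(recall(40, 30))     # 40 / 70
```

The same 40 true positives give a high precision but a modest recall, the trade-off a high-precision model accepts.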

3.4 Met: F-$\beta$ Score

An F-$\beta$ Score is a method of scoring a model on both precision and recall.

Where $\beta \in [0,\infty)$:

$$F_{\beta} = \left(1+\beta^{2}\right) \cdot \frac{Precision\ \cdot Recall}{\beta^{2} \cdot Precision + Recall}$$

When $\beta = 0$, we get precision:

$$F_{\beta=0} = \left(1+0^{2}\right) \cdot \frac{Precision\ \cdot Recall}{0^{2} \cdot Precision + Recall} = \left(1\right) \cdot \frac{Precision\ \cdot Recall}{Recall} = Precision$$

When $\beta = 1$, we get the harmonic mean of precision and recall:

$$F_{\beta=1} = \left(1+1^{2}\right) \cdot \frac{Precision\ \cdot Recall}{1^{2} \cdot Precision + Recall} = \left(2\right) \cdot \frac{Precision\ \cdot Recall}{Precision + Recall}$$

- Note: $Harmonic\ Mean = \frac{2xy}{x + y}$

... and when $\beta > 1$, we get something closer to recall. Dividing the numerator and denominator by $1+\beta^{2}$:

$$F_{\beta} = \left(1+\beta^{2}\right) \cdot \frac{Precision\ \cdot Recall}{\beta^{2} \cdot Precision + Recall} = \frac{Precision\ \cdot Recall}{\frac{\beta^{2}}{1+\beta^{2}} \cdot Precision + \frac{1}{1+ \beta^{2}} \cdot Recall}$$

As $\beta \rightarrow \infty$:

$$\frac{Precision\ \cdot Recall}{\frac{\beta^{2}}{1+\beta^{2}} \cdot Precision + \frac{1}{1+ \beta^{2}} \cdot Recall} \rightarrow \frac{Precision \cdot Recall}{1 \cdot Precision + 0 \cdot Recall} = \frac{Precision}{Precision} \cdot Recall = Recall$$
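A minimal sketch of the F-$\beta$ formula that checks the limiting cases above numerically; the precision and recall values are hypothetical.

```python
def f_beta(precision, recall, beta):
    """F-beta score: beta < 1 favours precision, beta > 1 favours recall."""
    b2 = beta ** 2
    denom = b2 * precision + recall
    if denom == 0:
        return 0.0  # both scores are zero
    return (1 + b2) * precision * recall / denom


p, r = 0.8, 0.5  # hypothetical precision and recall
print(f_beta(p, r, 0))     # equals precision
print(f_beta(p, r, 1))     # harmonic mean 2pr / (p + r)
print(f_beta(p, r, 0.5))   # leans toward precision
print(f_beta(p, r, 1000))  # approaches recall
```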

4. Models

4.1 Selection

4.2 Benchmark: Naive Bayes

4.2.1 Application

4.3 Model Application Pipeline

4.4 Logistic Regression

4.4.1 Application

4.4.2 Tuning

4.5 Random Forest

4.5.1 Application

4.5.2 Tuning

4.6 Ada Boost

4.6.1 Application

4.6.2 Tuning

4.7 Gradient Boost

4.7.1 Application

4.7.2 Tuning

4.8.1 Application

4.8.2 Tuning

4.9.1 Application

4.9.2 Tuning

4.10 Comparison

4.10.1 Feature Importance

4.10.2 Selection

4.1 Mod: Selection¶

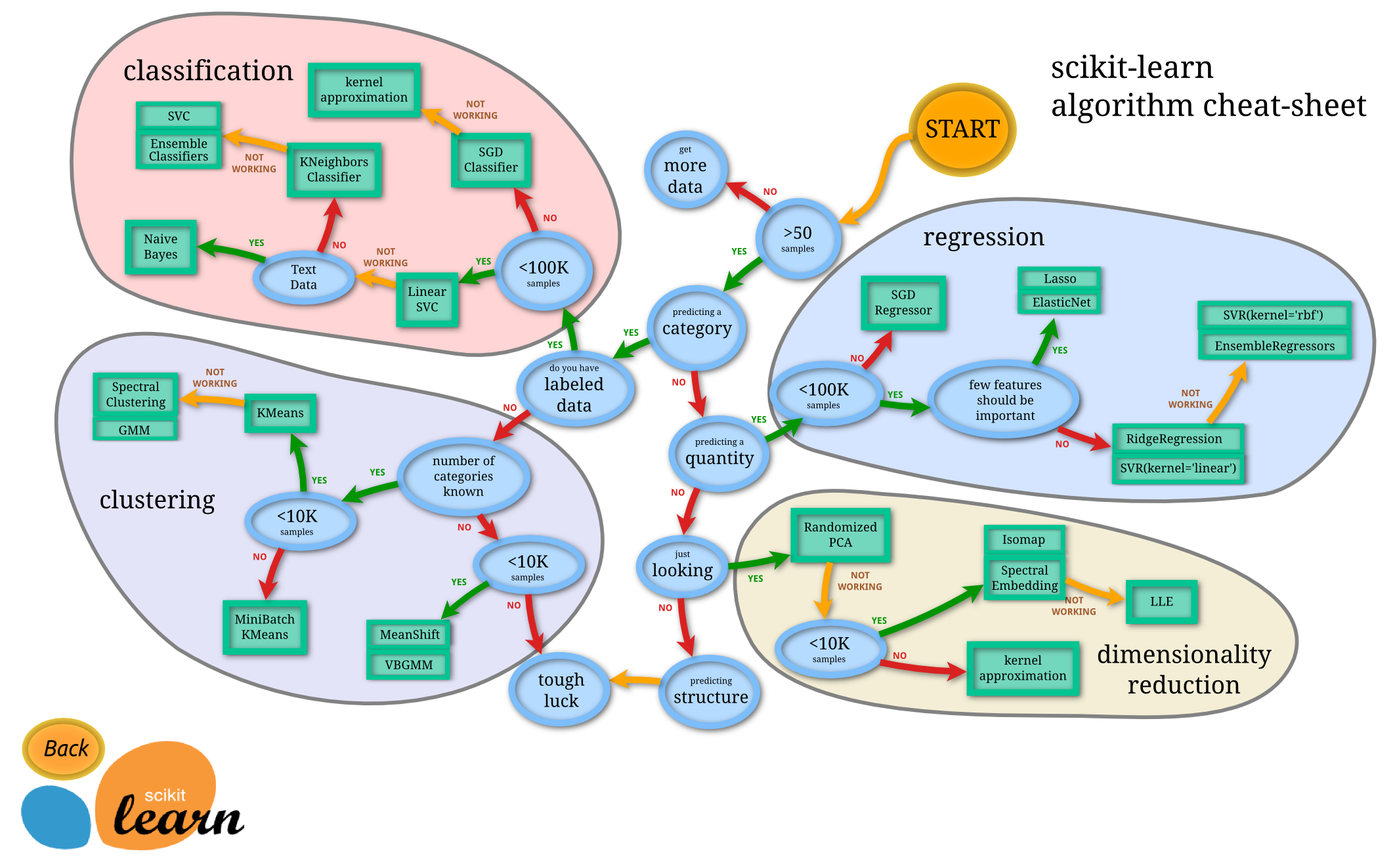

Toward selecting the right model, I need to determine what sort of variable we are predicting ($Y$). Some questions worth asking:

Do I have a label?

Yes, $Y$ takes on two values,

0and1indicating<=50kand>50krespectivelyIs the label discrete?

Yes, $Y$ exists in two states and not over a spectrum as a continuous variable

Do I have less than 100k observations?

Yes, I have 36,177 observations

Is this data textual?

No, this data is numerical and categorical defined specifically in their meanings (i.e. lacks the ambiguity of text data)

So, a model that predicts known categories (probability of known outcome, i.e. supervised learning with classification) is what I need.

SciKit-Learn offers this helpful decision path to guide me, but I will probably want to try other things as well.

4.2 Mod: Benchmark: Naive Bayes

The Naive Bayes Classifier will be used as a benchmark model for this work.

Bayes' Theorem is as such:

$$P\left(A|B\right) = \frac{P\left(B|A\right) \cdot P\left(A\right)}{P\left(B\right)}$$

The classifier is considered naive because it assumes the features are conditionally independent of one another, given the class.

Bayes' Theorem calculates the probability of an outcome (e.g. whether an individual receives income exceeding 50k/yr) based on the probabilities of other observed events (e.g. any factors we include in the model).

As an even simpler starting point, I propose a naive predictor (not to be confused with the Naive Bayes classifier, since it ignores the features entirely) that always predicts an individual makes more than 50k/yr. This model has no false negatives, so it has perfect recall (recall = 1).

Note: The purpose of generating a naive predictor is simply to show what a base model without any intelligence would look like. When there is no benchmark model set, getting a result better than random choice is a similar starting point.

4.2.1 Naive Bayes: Application

Since this model always predicts a 1:

- All true positives will be found (1 when 1 is true); their count equals the sum of the label

- False positives for this model are the difference between the number of all observations and those correctly predicted (1 when 0 is true)

- No true negatives will be found (0 when 0 is true), as no 0s are ever predicted

- No false negatives are predicted (0 when 1 is true), as no 0s are ever predicted

Note: I set $\beta = \frac{1}{2}$ because false positives are costly for CharityML; recall from the F-$\beta$ discussion that $\beta < 1$ weights precision more heavily than recall.

Naive Predictor - Accuracy score: 0.2478, F-score: 0.2917
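The naive predictor's scores can be reproduced from the class counts reported in the summary statistics (11,208 records above 50k and 34,014 at or below). A minimal sketch assuming the scores were computed over the full dataset; because the split is stratified, the test set has nearly the same ratio.

```python
def f_beta(precision, recall, beta):
    """F-beta score as defined in the metrics section."""
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Class counts from the full dataset
n_positive, n_negative = 11208, 34014

# A predictor that always outputs 1 (income > 50k)
tp, fp, fn, tn = n_positive, n_negative, 0, 0

accuracy = (tp + tn) / (tp + fp + fn + tn)
precision = tp / (tp + fp)
recall = tp / (tp + fn)  # always 1.0 for this predictor
fscore = f_beta(precision, recall, beta=0.5)

print(f"Naive Predictor - Accuracy score: {accuracy:.4f}, F-score: {fscore:.4f}")
# Naive Predictor - Accuracy score: 0.2478, F-score: 0.2917
```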

4.3 Mod: Model Application Pipeline

It can be useful to establish a standard routine for training, predicting and scoring each model, so that outcomes generated by the same process can be compared directly.
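A minimal sketch of such a routine, modeled on the columns of the result tables below (train_time, pred_time, acc_train, acc_test, f_train, f_test). train_predict is a hypothetical helper, not the notebook's code, and scoring the training side on only the first 300 training rows is an assumption that keeps scoring cheap as the training size grows.

```python
import time

import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, fbeta_score


def train_predict(learner, sample_size, X_train, y_train, X_test, y_test, beta=0.5):
    """Fit on the first `sample_size` training rows; return timings and scores."""
    results = {}

    start = time.time()
    learner.fit(X_train[:sample_size], y_train[:sample_size])
    results["train_time"] = time.time() - start

    start = time.time()
    pred_test = learner.predict(X_test)
    pred_train = learner.predict(X_train[:300])  # assumption: 300-row training sample
    results["pred_time"] = time.time() - start

    results["acc_train"] = accuracy_score(y_train[:300], pred_train)
    results["acc_test"] = accuracy_score(y_test, pred_test)
    results["f_train"] = fbeta_score(y_train[:300], pred_train, beta=beta)
    results["f_test"] = fbeta_score(y_test, pred_test, beta=beta)

    print(f"{learner.__class__.__name__} trained on {sample_size} samples.")
    return results


# Usage on synthetic data (hypothetical): the label depends linearly on two features
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 3))
y = (X[:, 0] + X[:, 1] > 0).astype(int)
X_train, X_test, y_train, y_test = X[:400], X[400:], y[:400], y[400:]

for size in [40, 400]:
    print(train_predict(LogisticRegression(), size, X_train, y_train, X_test, y_test))
```

Passing a fresh estimator for each call keeps runs independent, since fit() overwrites any earlier training.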

4.4 Mod: Logistic Regression

Logistic regression models the probability that the dependent variable takes the positive class, given the independent variables. Its output is bounded between 0 and 1 (i.e. $h_{\theta}\left(X\right) \in \left(0,1\right)$):

$$P\left(Y=y | X\right) = \left\{ \begin{array}{ll} h_{\theta}\left(X\right) = \frac{1}{1+e^{-\theta^{T}X}} & y=1 \\ 1 - h_{\theta}\left(X\right) & y=0 \\ \end{array} \right.$$

The parameters $\theta$ are fit by minimizing the log-loss cost function over the $m$ training observations:

$$J\left(\theta\right) = -\frac{1}{m}\sum_{i=1}^{m}\left[y_{i}\log h_{\theta}\left(x_{i}\right) + \left(1-y_{i}\right)\log\left(1-h_{\theta}\left(x_{i}\right)\right)\right]$$

whose gradient relies on the derivative of the sigmoid, with $z = \theta^{T}X$:

$$\frac{d h\left(z\right)}{d z} = h\left(z\right) \cdot \left(1 - h\left(z\right)\right)$$

Deriving the sigmoid's derivative: how does $h'\left(x\right) = h\left(x\right) \cdot \left(1 - h\left(x\right)\right)$ fall out of $h\left(x\right) = \frac{1}{1+e^{-x}}$?
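Before the symbolic derivation, the identity $h'(x) = h(x)(1 - h(x))$ for the logistic (sigmoid) function can be checked numerically with a central finite difference. A minimal sketch; the sample points are arbitrary.

```python
import math


def sigmoid(x):
    """Logistic function h(x) = 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def numerical_derivative(f, x, eps=1e-6):
    """Central finite-difference approximation of f'(x)."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)


points = [-3.0, -0.5, 0.0, 1.2, 4.0]
for x in points:
    analytic = sigmoid(x) * (1 - sigmoid(x))  # h(x) * (1 - h(x))
    numeric = numerical_derivative(sigmoid, x)
    print(f"x={x:+.1f}  h(1-h)={analytic:.6f}  finite difference={numeric:.6f}")
```

The two columns agree to about ten decimal places; at $x = 0$ both equal 0.25, the sigmoid's steepest slope.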

The following math involves some single-variable differential calculus: the power rule, $\frac{d}{dx}x^{n} = n\cdot x^{n-1}$, and the chain rule, $\frac{d}{dx}f\left(g\left(x\right)\right)= f'\left(g\left(x\right)\right) \cdot g'\left(x\right)$:

$$h\left(x\right) = \frac{1}{1+e^{-x}} = \left(1+e^{-x}\right)^{-1}$$

$$\frac{d h\left(x\right)}{d x} = -\left(1+e^{-x}\right)^{-2} \cdot \left(-e^{-x}\right) = \frac{e^{-x}}{\left(1+e^{-x}\right)^{2}} = \frac{e^{-x}}{1+e^{-x}} \cdot \frac{1}{1+e^{-x}}$$

$$= \frac{\left(1+e^{-x}\right)-1}{1+e^{-x}} \cdot \frac{1}{1+e^{-x}} = \left(1-\frac{1}{1+e^{-x}}\right) \cdot \frac{1}{1+e^{-x}} = \left(1-h\left(x\right)\right) \cdot h\left(x\right) \quad \square$$

LogisticRegression trained on 361 samples.

LogisticRegression trained on 3617 samples.

LogisticRegression trained on 36177 samples.

Logistic Regression: Default

ConvergenceWarning (sklearn/linear_model/_logistic.py:940):

lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Increase the number of iterations (max_iter) or scale the data as shown in:

https://scikit-learn.org/stable/modules/preprocessing.html

Please also refer to the documentation for alternative solver options:

https://scikit-learn.org/stable/modules/linear_model.html#logistic-regression

| | train_time | pred_time | acc_train | acc_test | f_train | f_test |
|---|---|---|---|---|---|---|
| 0 | 0.041789 | 0.004817 | 0.903333 | 0.820785 | 0.833333 | 0.642790 |
| 1 | 0.030623 | 0.003460 | 0.863333 | 0.839027 | 0.705128 | 0.682561 |
| 2 | 0.442562 | 0.005428 | 0.873333 | 0.841902 | 0.730519 | 0.691478 |

CPU times: user 2.05 s, sys: 31 ms, total: 2.08 s

Wall time: 549 ms

LogisticRegression trained on 361 samples.

LogisticRegression trained on 3617 samples.

LogisticRegression trained on 36177 samples.

Logistic Regression: Mindful Parameters

| | train_time | pred_time | acc_train | acc_test | f_train | f_test |
|---|---|---|---|---|---|---|
| 0 | 0.006160 | 0.003227 | 0.896667 | 0.817800 | 0.808824 | 0.634809 |
| 1 | 0.013088 | 0.003877 | 0.860000 | 0.838695 | 0.696203 | 0.681541 |
| 2 | 0.205961 | 0.004662 | 0.876667 | 0.841680 | 0.740132 | 0.690949 |

CPU times: user 809 ms, sys: 15.3 ms, total: 824 ms

Wall time: 257 ms

LogisticRegression trained on 361 samples.

LogisticRegression trained on 3617 samples.

LogisticRegression trained on 36177 samples.

Logistic Regression: Mindful Parameters Sub

| | train_time | pred_time | acc_train | acc_test | f_train | f_test |
|---|---|---|---|---|---|---|
| 0 | 0.002247 | 0.002898 | 0.870000 | 0.797789 | 0.750000 | 0.570435 |
| 1 | 0.010326 | 0.003988 | 0.866667 | 0.810724 | 0.719178 | 0.615584 |
| 2 | 0.152398 | 0.002877 | 0.856667 | 0.812604 | 0.696429 | 0.620793 |

CPU times: user 743 ms, sys: 11.3 ms, total: 754 ms

Wall time: 193 ms

LogisticRegressionCV trained on 361 samples.

LogisticRegressionCV trained on 3617 samples.

LogisticRegressionCV trained on 36177 samples.

Logistic Regression with CV

| | train_time | pred_time | acc_train | acc_test | f_train | f_test |
|---|---|---|---|---|---|---|
| 0 | 0.061737 | 0.003454 | 0.883333 | 0.819569 | 0.786290 | 0.640684 |
| 1 | 0.638444 | 0.003556 | 0.863333 | 0.840464 | 0.705128 | 0.686254 |
| 2 | 7.846933 | 0.002949 | 0.873333 | 0.841791 | 0.730519 | 0.691130 |

CPU times: user 13.3 s, sys: 103 ms, total: 13.4 s

Wall time: 8.58 s

4.5 Mod: Random Forest

NEED: WRITEUP, VISUALIZE

RandomForestClassifier trained on 361 samples.

RandomForestClassifier trained on 3617 samples.

RandomForestClassifier trained on 36177 samples.

Random Forest: Default

| | train_time | pred_time | acc_train | acc_test | f_train | f_test |
|---|---|---|---|---|---|---|
| 0 | 0.164030 | 0.079762 | 1.000000 | 0.822001 | 1.000000 | 0.644699 |
| 1 | 0.309960 | 0.119150 | 0.996667 | 0.837811 | 0.988372 | 0.676572 |
| 2 | 3.997592 | 0.183971 | 0.983333 | 0.841570 | 0.975610 | 0.685871 |

CPU times: user 4.81 s, sys: 31.6 ms, total: 4.85 s

Wall time: 4.87 s

RandomForestClassifier trained on 361 samples.

RandomForestClassifier trained on 3617 samples.

RandomForestClassifier trained on 36177 samples.

Random Forest: Tuned

| | train_time | pred_time | acc_train | acc_test | f_train | f_test |
|---|---|---|---|---|---|---|
| 0 | 0.481433 | 0.212262 | 0.773333 | 0.752128 | 0.000000 | 0.000000 |
| 1 | 0.862287 | 0.305937 | 0.873333 | 0.840022 | 0.753968 | 0.699949 |
| 2 | 10.794462 | 0.474754 | 0.883333 | 0.854063 | 0.772059 | 0.727489 |

CPU times: user 13 s, sys: 81.7 ms, total: 13.1 s

Wall time: 13.2 s
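A minimal sketch of a grid search scored with F0.5, using scikit-learn's GridSearchCV and make_scorer(fbeta_score, beta=0.5). The grid is hypothetical, built from the best parameters reported in the runs below; the notebook's actual grid, cross-validation settings and data are not shown, so synthetic data from make_classification stands in.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import fbeta_score, make_scorer
from sklearn.model_selection import GridSearchCV


def tune(estimator, param_grid, X, y, beta=0.5, cv=3):
    """Grid-search `estimator`, scoring each candidate with F-beta (beta < 1 favours precision)."""
    scorer = make_scorer(fbeta_score, beta=beta)
    search = GridSearchCV(estimator, param_grid, scoring=scorer, cv=cv)
    search.fit(X, y)
    return search.best_estimator_, search.best_params_


# Hypothetical grid around the reported best values
param_grid = {"n_estimators": [200, 250], "min_samples_leaf": [2, 3, 5]}

X, y = make_classification(n_samples=300, n_features=10, random_state=0)
best_model, best_params = tune(RandomForestClassifier(random_state=0), param_grid, X, y)
print(best_params)
```

The same helper accepts an AdaBoostClassifier with a learning_rate and n_estimators grid; only the estimator and the grid change.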

Initial Model:

Accuracy: 0.8416

F0.5-Score: 0.6859

Tuned Model:

Accuracy: 0.8595

F0.5-Score: 0.7379

Best Parameters:

{'min_samples_leaf': 5, 'n_estimators': 200}

CPU times: user 3min 50s, sys: 1.08 s, total: 3min 51s

Wall time: 3min 52s

Initial Model:

Accuracy: 0.8416

F0.5-Score: 0.6859

Tuned Model:

Accuracy: 0.8599

F0.5-Score: 0.7375

Best Parameters:

{'min_samples_leaf': 3, 'n_estimators': 250}

CPU times: user 3min 10s, sys: 644 ms, total: 3min 11s

Wall time: 3min 11s

Initial Model:

Accuracy: 0.8416

F0.5-Score: 0.6859

Tuned Model:

Accuracy: 0.8614

F0.5-Score: 0.7383

Best Parameters:

{'min_samples_leaf': 2, 'n_estimators': 250}

CPU times: user 6min 20s, sys: 5.48 s, total: 6min 25s

Wall time: 6min 34s

RandomForestClassifier trained on 361 samples.

RandomForestClassifier trained on 3617 samples.

RandomForestClassifier trained on 36177 samples.

Random Forest: Gridded

| | train_time | pred_time | acc_train | acc_test | f_train | f_test |
|---|---|---|---|---|---|---|
| 0 | 0.260347 | 0.158871 | 0.923333 | 0.829298 | 0.875000 | 0.670554 |
| 1 | 0.589142 | 0.223911 | 0.913333 | 0.851410 | 0.839041 | 0.716216 |
| 2 | 8.200854 | 0.340266 | 0.910000 | 0.861360 | 0.852273 | 0.738287 |

CPU times: user 9.68 s, sys: 62.9 ms, total: 9.74 s

Wall time: 9.79 s

4.6 Mod: Ada Boost

NEED: WRITEUP, VISUALIZE

AdaBoostClassifier trained on 361 samples.

AdaBoostClassifier trained on 3617 samples.

AdaBoostClassifier trained on 36177 samples.

Ada Boost Classifier: Default

| | train_time | pred_time | acc_train | acc_test | f_train | f_test |
|---|---|---|---|---|---|---|
| 0 | 0.060788 | 0.116385 | 0.933333 | 0.827418 | 0.870253 | 0.656731 |
| 1 | 0.173964 | 0.111657 | 0.890000 | 0.847319 | 0.765625 | 0.700140 |
| 2 | 1.533097 | 0.091096 | 0.886667 | 0.860918 | 0.774648 | 0.738187 |

CPU times: user 2.04 s, sys: 48 ms, total: 2.09 s

Wall time: 2.11 s

Initial Model:

Accuracy: 0.8609

F0.5-Score: 0.7382

Tuned Model:

Accuracy: 0.8700

F0.5-Score: 0.7568

Best Parameters:

{'learning_rate': 1.5, 'n_estimators': 400}

CPU times: user 2min 37s, sys: 1.93 s, total: 2min 39s

Wall time: 2min 39s

Initial Model:

Accuracy: 0.8609

F0.5-Score: 0.7382

Tuned Model:

Accuracy: 0.8701

F0.5-Score: 0.7564

Best Parameters:

{'learning_rate': 1.6, 'n_estimators': 800}

CPU times: user 13min 28s, sys: 8.21 s, total: 13min 37s

Wall time: 13min 39s

Initial Model:

Accuracy: 0.8609

F0.5-Score: 0.7382

Tuned Model:

Accuracy: 0.8701

F0.5-Score: 0.7564

Best Parameters:

{'learning_rate': 1.6, 'n_estimators': 800}

CPU times: user 14min 39s, sys: 7.63 s, total: 14min 47s

Wall time: 14min 48s

Initial Model:

Accuracy: 0.8609

F0.5-Score: 0.7382

Tuned Model:

Accuracy: 0.8701

F0.5-Score: 0.7564

Best Parameters:

{'learning_rate': 1.6, 'n_estimators': 850}

CPU times: user 14min 19s, sys: 5.38 s, total: 14min 24s

Wall time: 14min 25s

AdaBoostClassifier trained on 361 samples.

AdaBoostClassifier trained on 3617 samples.

AdaBoostClassifier trained on 36177 samples.

Ada Boost Classifier: Tuned with RF Tuned Classifier

| | train_time | pred_time | acc_train | acc_test | f_train | f_test |
|---|---|---|---|---|---|---|
| 0 | 1.123698 | 0.644724 | 0.983333 | 0.824544 | 0.967262 | 0.651326 |
| 1 | 218.648478 | 68.045358 | 1.000000 | 0.821117 | 1.000000 | 0.639873 |
| 2 | 2739.484788 | 113.507644 | 0.980000 | 0.826976 | 0.963855 | 0.652946 |

CPU times: user 51min 54s, sys: 24.5 s, total: 52min 19s

Wall time: 52min 22s

AdaBoostClassifier trained on 361 samples.

AdaBoostClassifier trained on 3617 samples.

AdaBoostClassifier trained on 36177 samples.

Ada Boost Classifier: Tuned with RF Tuned Classifier

| | train_time | pred_time | acc_train | acc_test | f_train | f_test |
|---|---|---|---|---|---|---|
| 0 | 1.159131 | 0.647605 | 0.983333 | 0.823881 | 0.967262 | 0.649545 |
| 1 | 388.360656 | 116.305173 | 0.996667 | 0.817579 | 0.988372 | 0.632345 |
| 2 | 4738.337229 | 205.996747 | 0.970000 | 0.825650 | 0.937500 | 0.649914 |

CPU times: user 1h 29min 53s, sys: 46.7 s, total: 1h 30min 40s

Wall time: 1h 30min 50s

AdaBoostClassifier trained on 361 samples.

AdaBoostClassifier trained on 3617 samples.

AdaBoostClassifier trained on 36177 samples.

Ada Boost Classifier: Tuned

| | train_time | pred_time | acc_train | acc_test | f_train | f_test |
|---|---|---|---|---|---|---|
| 0 | 0.463919 | 0.775395 | 0.980000 | 0.801548 | 0.955882 | 0.595753 |
| 1 | 1.361193 | 0.883654 | 0.893333 | 0.853952 | 0.771605 | 0.715432 |
| 2 | 11.575145 | 0.774858 | 0.890000 | 0.869983 | 0.781250 | 0.756833 |

CPU times: user 15.6 s, sys: 195 ms, total: 15.8 s

Wall time: 15.9 s

4.7 Mod: Gradient Boost

NEED: WRITEUP, VISUALIZE

GradientBoostingClassifier trained on 361 samples.

GradientBoostingClassifier trained on 3617 samples.

GradientBoostingClassifier trained on 36177 samples.

| | train_time | pred_time | acc_train | acc_test | f_train | f_test |
|---|---|---|---|---|---|---|
| 0 | 0.078174 | 0.021775 | 0.970000 | 0.830956 | 0.953125 | 0.668177 |
| 1 | 0.466704 | 0.018269 | 0.903333 | 0.854505 | 0.821429 | 0.723784 |
| 2 | 4.998712 | 0.018348 | 0.890000 | 0.863129 | 0.795455 | 0.744349 |

CPU times: user 5.56 s, sys: 33.8 ms, total: 5.59 s

Wall time: 5.62 s

GradientBoostingClassifier trained on 361 samples.

GradientBoostingClassifier trained on 3617 samples.

GradientBoostingClassifier trained on 36177 samples.

Gradient Boost Classifier: Tuned

| | train_time | pred_time | acc_train | acc_test | f_train | f_test |
|---|---|---|---|---|---|---|
| 0 | 0.381919 | 0.061622 | 1.000000 | 0.817910 | 1.000000 | 0.634016 |
| 1 | 2.161059 | 0.050139 | 0.923333 | 0.851410 | 0.849359 | 0.710313 |
| 2 | 24.331458 | 0.049148 | 0.893333 | 0.872637 | 0.797101 | 0.760491 |

CPU times: user 26.8 s, sys: 126 ms, total: 26.9 s

Wall time: 27.1 s

GradientBoostingClassifier trained on 361 samples.

GradientBoostingClassifier trained on 3617 samples.

GradientBoostingClassifier trained on 36177 samples.

Gradient Boost Classifier: Tuned

| | train_time | pred_time | acc_train | acc_test | f_train | f_test |
|---|---|---|---|---|---|---|
| 0 | 1.654364 | 0.480121 | 1.000000 | 0.818132 | 1.000000 | 0.634398 |
| 1 | 12.358908 | 0.296092 | 0.966667 | 0.843007 | 0.941358 | 0.685927 |
| 2 | 139.358182 | 0.329540 | 0.893333 | 0.870868 | 0.792254 | 0.755436 |

CPU times: user 1min 22s, sys: 727 ms, total: 1min 23s

Wall time: 2min 34s

Initial Model:

Accuracy: 0.8631

F0.5-Score: 0.7443

Tuned Model:

Accuracy: 0.8698

F0.5-Score: 0.7544

Best Parameters:

{'learning_rate': 0.01, 'max_depth': 7, 'max_features': 95, 'min_samples_leaf': 3, 'n_estimators': 750}

CPU times: user 11h 23min 37s, sys: 2min 24s, total: 11h 26min 2s

Wall time: 12h 51min 42s
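The gradient boosting search above took almost 13 hours of wall time, so reusing its best parameters avoids repeating it. The small `learning_rate` (0.01) shrinks each tree's contribution, which is why it pairs with many trees (750). This sketch assumes scikit-learn's `GradientBoostingClassifier`; `BEST_GB_PARAMS`, `make_tuned_gb`, and `random_state=0` are hypothetical names and choices.

```python
from sklearn.ensemble import GradientBoostingClassifier

# Best parameters reported by the gradient boosting grid search above.
BEST_GB_PARAMS = {
    "learning_rate": 0.01,
    "max_depth": 7,
    "max_features": 95,
    "min_samples_leaf": 3,
    "n_estimators": 750,
}


def make_tuned_gb(random_state=0, **overrides):
    """Build a GradientBoostingClassifier from the reported best parameters.

    `overrides` replaces individual parameters, e.g. fewer trees for a quick smoke test.
    """
    params = {**BEST_GB_PARAMS, **overrides}
    return GradientBoostingClassifier(random_state=random_state, **params)
```

Because `max_features=95` is an integer, each split considers 95 randomly chosen columns, so the encoded feature matrix must have at least 95 columns.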

4.8 Mod: Extreme Gradient Boosting

NEED: WRITEUP, TUNING, VISUALIZE

XGBClassifier trained on 361 samples.

XGBClassifier trained on 3617 samples.

XGBClassifier trained on 36177 samples.

Extreme Gradient Boost Classifier: Default

| | train_time | pred_time | acc_train | acc_test | f_train | f_test |
|---|---|---|---|---|---|---|
| 0 | 0.214545 | 0.046405 | 0.996667 | 0.813709 | 0.997024 | 0.624104 |
| 1 | 1.424718 | 0.052277 | 0.943333 | 0.848867 | 0.904605 | 0.700499 |
| 2 | 13.720626 | 0.046983 | 0.893333 | 0.872637 | 0.797101 | 0.758956 |

CPU times: user 15.3 s, sys: 77.7 ms, total: 15.4 s

Wall time: 15.5 s

XGBClassifier trained on 361 samples.

XGBClassifier trained on 3617 samples.

XGBClassifier trained on 36177 samples.

Extreme Gradient Boost Classifier: Tuned

| | train_time | pred_time | acc_train | acc_test | f_train | f_test |
|---|---|---|---|---|---|---|
| 0 | 0.627447 | 0.154005 | 1.000000 | 0.806412 | 1.000000 | 0.607339 |
| 1 | 7.082000 | 0.221744 | 0.993333 | 0.837922 | 0.985294 | 0.674581 |
| 2 | 68.581625 | 0.201561 | 0.920000 | 0.866998 | 0.856164 | 0.743605 |

CPU times: user 1min 16s, sys: 159 ms, total: 1min 16s

Wall time: 1min 16s

4.9 Mod: K-Nearest Neighbors

NEED: WRITEUP, TUNING, VISUALIZE

KNeighborsClassifier trained on 361 samples.

KNeighborsClassifier trained on 3617 samples.

KNeighborsClassifier trained on 36177 samples.

KNN Classifier: Default

| | train_time | pred_time | acc_train | acc_test | f_train | f_test |
|---|---|---|---|---|---|---|
| 0 | 0.005465 | 0.523758 | 0.890000 | 0.795799 | 0.758929 | 0.584492 |
| 1 | 0.011615 | 2.421998 | 0.863333 | 0.814262 | 0.709459 | 0.625377 |
| 2 | 0.867722 | 12.719848 | 0.886667 | 0.816142 | 0.759494 | 0.629539 |

CPU times: user 16.4 s, sys: 82.5 ms, total: 16.5 s

Wall time: 16.6 s

KNeighborsClassifier trained on 361 samples.

KNeighborsClassifier trained on 3617 samples.

KNeighborsClassifier trained on 36177 samples.

KNN Classifier: SQRT Neighbors

| | train_time | pred_time | acc_train | acc_test | f_train | f_test |
|---|---|---|---|---|---|---|
| 0 | 0.001331 | 0.385978 | 0.773333 | 0.752128 | 0.000000 | 0.000000 |
| 1 | 0.011717 | 4.172154 | 0.843333 | 0.825207 | 0.666667 | 0.656572 |
| 2 | 0.852093 | 39.302759 | 0.833333 | 0.827750 | 0.635246 | 0.661401 |

CPU times: user 45.3 s, sys: 210 ms, total: 45.5 s

Wall time: 44.7 s
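`KNN Classifier: SQRT Neighbors` presumably sets `n_neighbors` to roughly the square root of the training-set size, a common rule of thumb. The exact rule is not shown, so this sketch rounds √n and, as an added assumption, bumps even values to the next odd number; `sqrt_neighbors` is a hypothetical helper. With about 19 neighbors drawn from only 361 rows, the majority class can win every vote, which is consistent with the zero F-scores in row 0.

```python
import math


def sqrt_neighbors(n_samples: int) -> int:
    """Hypothetical rule: k = round(sqrt(n)), bumped to the next odd number."""
    k = max(1, round(math.sqrt(n_samples)))
    if k % 2 == 0:
        k += 1  # with uniform weights and two classes, an odd k cannot split the vote evenly
    return k


for n in (361, 3617, 36177):
    print(n, sqrt_neighbors(n))  # 19, 61, 191
```

The result would be passed as `KNeighborsClassifier(n_neighbors=sqrt_neighbors(len(X_train_subset)))`, where `X_train_subset` stands for whichever training slice is being fitted.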

4.10 Comparison

NEED: VISUALIZATION

| | train_time | pred_time | acc_train | acc_test | f_train | f_test |
|---|---|---|---|---|---|---|
| GradientBoostingClassifier | 24.331458 | 0.049148 | 0.893333 | 0.872637 | 0.797101 | 0.760491 |
| XGBClassifier | 13.720626 | 0.046983 | 0.893333 | 0.872637 | 0.797101 | 0.758956 |
| AdaBoostClassifier | 11.575145 | 0.774858 | 0.890000 | 0.869983 | 0.781250 | 0.756833 |
| RandomForestClassifier | 8.200854 | 0.340266 | 0.910000 | 0.861360 | 0.852273 | 0.738287 |
| LogisticRegressionCV | 7.846933 | 0.002949 | 0.873333 | 0.841791 | 0.730519 | 0.691130 |
| KNeighborsClassifier | 0.852093 | 39.302759 | 0.833333 | 0.827750 | 0.635246 | 0.661401 |

The Gradient Boosting Classifier achieves the highest F-0.5 score on the test set, 0.7605, narrowly ahead of XGBClassifier (0.7590). The Random Forest Classifier may be overfitting: the gap between its training and testing F-0.5 scores (0.8523 vs. 0.7383) is the largest of the models compared.
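The overfitting observation can be made explicit by subtracting each model's test F0.5 from its training F0.5. The values below are copied from the comparison table; with a single train/test split this is a rough signal rather than a formal test.

```python
import pandas as pd

# F0.5 scores copied from the comparison table above.
scores = pd.DataFrame(
    {
        "f_train": [0.797101, 0.797101, 0.781250, 0.852273, 0.730519, 0.635246],
        "f_test": [0.760491, 0.758956, 0.756833, 0.738287, 0.691130, 0.661401],
    },
    index=[
        "GradientBoostingClassifier",
        "XGBClassifier",
        "AdaBoostClassifier",
        "RandomForestClassifier",
        "LogisticRegressionCV",
        "KNeighborsClassifier",
    ],
)

# A large positive gap means the model scores much better on data it was trained on.
scores["f_gap"] = scores["f_train"] - scores["f_test"]

print(scores.sort_values("f_test", ascending=False).round(4))
print("Largest train/test gap:", scores["f_gap"].idxmax())  # RandomForestClassifier
```

The random forest's gap (about 0.114) is roughly three times that of the next-largest models, while KNN's is negative.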

An important task when performing supervised learning is determining which features provide the most predictive power.

By focusing on the relationship between only a few crucial features and the target label, I can simplify my understanding of the phenomenon, which is almost always useful.

For this project, that means identifying a small number of features that most strongly predict whether an individual makes at most or more than $50,000.

The top-5 factors in predicting if a person makes more than 50k annually are:

- marital status being a married civilian spouse (Married-civ-spouse)

- capital gain

- education number

- capital loss

- age
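The section does not say how the top five features were ranked. One common approach, sketched here under that assumption, reads the impurity-based `feature_importances_` of a fitted tree ensemble such as the selected `GradientBoostingClassifier`; `top_k_features` is a hypothetical helper. If categorical columns were one-hot encoded (section 2.2.1), a single category such as `Married-civ-spouse` can rank as its own feature.

```python
import pandas as pd


def top_k_features(model, feature_names, k=5):
    """Rank features by a fitted tree ensemble's normalized impurity-based importances."""
    importances = pd.Series(model.feature_importances_, index=feature_names)
    return importances.sort_values(ascending=False).head(k)
```

Impurity-based importances can favor high-cardinality continuous features such as capital gain, so permutation importance is a useful cross-check when the ranking matters.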

4.10.3 Comp: Reduced Feature Model Performance

I can now compare how the model performs when I remove all features except the five that contribute the most predictive power.

Final Model trained on full data:

Accuracy on testing data: 0.8726

F-score on testing data: 0.7605

Final Model trained on reduced data:

Accuracy on testing data: 0.8573

F-score on testing data: 0.7286
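The reduced-feature comparison amounts to refitting the same estimator on a subset of columns. A minimal sketch, assuming `X_train`/`X_test` are pandas DataFrames and the top columns are passed in by name; `compare_reduced` is a hypothetical helper. It also records fit time, which makes the speed side of the trade-off measurable.

```python
from time import perf_counter

from sklearn.base import clone
from sklearn.metrics import accuracy_score, fbeta_score


def compare_reduced(model, X_train, y_train, X_test, y_test, top_columns):
    """Fit fresh copies of `model` on all columns and on `top_columns` only; report test scores."""
    results = {}
    for label, cols in (("full", list(X_train.columns)), ("reduced", list(top_columns))):
        clf = clone(model)  # unfitted copy with the same hyperparameters
        start = perf_counter()
        clf.fit(X_train[cols], y_train)
        fit_seconds = perf_counter() - start
        pred = clf.predict(X_test[cols])
        results[label] = {
            "accuracy": accuracy_score(y_test, pred),
            "f_score": fbeta_score(y_test, pred, beta=0.5),
            "fit_seconds": fit_seconds,
        }
        print(f"Final Model trained on {label} data:")
        print(f"Accuracy on testing data: {results[label]['accuracy']:.4f}")
        print(f"F-score on testing data: {results[label]['f_score']:.4f}")
    return results
```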

Predictive power drops (test accuracy from 0.8726 to 0.8573, F-score from 0.7605 to 0.7286), but the reduced model trains much faster than the one using all features. Because predictive power is already only moderate, trading some of it away for speed does not seem worthwhile. If the data were orders of magnitude larger, I might change this evaluation.

For now, I prefer the model with all features, even though it is slower than the one using only the five most influential features.

... and that's it!

What did I do:

- Built a model to predict if a person makes more than 50k annually

How did I do it:

- Evaluated data from the census

  - Received

  - Examined distributions

  - Evaluated skew

  - Evaluated relationships between factors

    - Examined correlations and Theil's Uncertainty Coefficient

- Determined a metric to evaluate a model's performance given this problem

  - F-0.5 Score, preferring a high-precision model

- Transformed the data

  - Logarithmic, Normalized

- Split and reordered the data

  - Ensured the distributions of positive and negative classes were similar to those in the initial data

- Trained a number of models and selected the most predictive, given the metric

  - Selected Gradient Boosting Classifier

- Tested the model with reduced features and determined I would stick with the fully featured model

Deploying the model by saving and tying it to a software solution for a customer could be a useful next step.
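One common way to save a fitted scikit-learn model is `joblib`, the approach described in scikit-learn's model persistence guide. This is a minimal sketch; `save_model` and `load_model` are hypothetical wrappers, and tying the model into a customer-facing system is beyond it.

```python
import joblib


def save_model(model, path):
    """Persist a fitted estimator to disk."""
    joblib.dump(model, path)


def load_model(path):
    """Load a persisted estimator (joblib uses pickle under the hood)."""
    return joblib.load(path)
```

Because joblib files are pickles, load them only from trusted sources, and load them with the same scikit-learn version that saved them.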